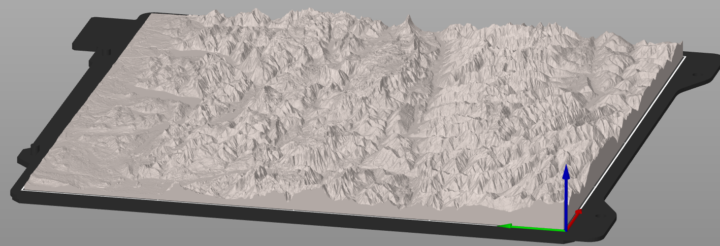

What if your 3D printer could do more than print toys, vases, or endless test cubes? What if it became your personal tool for repairing, improving, and customizing the world around you: saving money, reducing waste, and solving everyday problems in creative, elegant ways? In this post, I will show how to print your own personalized […]

3D Print Personalized QR LinkedIn Badges