Employing human support engineers to handle incoming queries about products and services is both costly and hard to scale. This is particularly challenging for self-published mobile apps and small to medium-sized businesses, whose owners often lack the financial resources to offer human support.

I personally experienced this issue when I published my own mobile app in various app stores. The revenue generated by the app was insufficient to cover the expenses of hiring a support engineer. As a result, I had to handle all the email inquiries myself, which significantly impeded my ability to focus on product innovation. I found myself repeatedly addressing the same questions as the number of app users grew, and the volume of daily email inquiries increased substantially.

It became evident that this approach was not sustainable. Today’s users have high expectations for support, even when using free products or services. They expect prompt and accurate responses to their inquiries.

Given this predicament, I started searching for a solution that would allow me to quickly address basic questions about my product. This is when I implemented InqueryIQ, a cutting-edge platform that combines the power of the latest generative OpenAI model with customizable product context. It seamlessly integrates with an existing support email inbox.

InqueryIQ is essentially a product email support container that utilizes state-of-the-art generative AI from OpenAI to automatically respond to email inquiries related to any configurable product or service. The fully autonomous generative AI support agent learns from individual users’ chat history and promptly handles all product or service-related email inquiries.

What sets InqueryIQ apart is its high level of configurability, allowing it to be tailored precisely to your specific product or service. It efficiently and flexibly addresses all email support inquiries, making it an invaluable tool for businesses seeking to enhance their customer support capabilities.

How to run InqueryIQ?

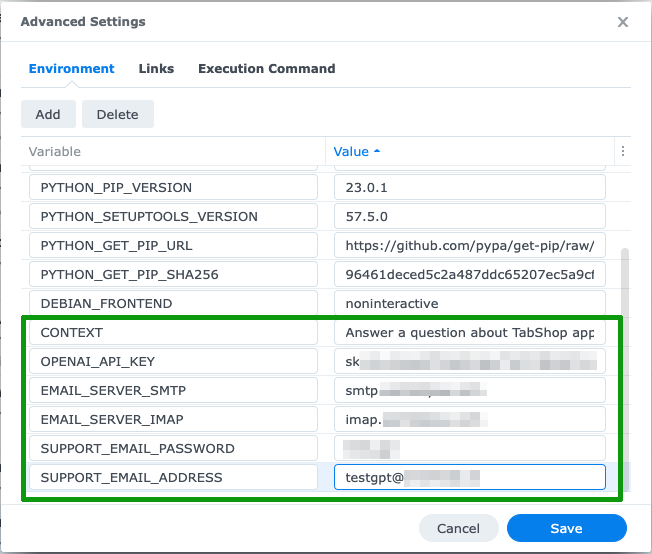

To run your own customized InqueryIQ AI email support agent, pull the Docker image from Docker Hub and configure the instance with your own email server, support email address and credentials, and your own OpenAI API key.

You configure those parameters by setting environment variables when you start your InqueryIQ Docker container.

In addition to the mandatory parameters, you can adjust optional parameters that control the conversation and limit token usage, which keeps the OpenAI cost under control.

Refer to the list of all configurable environment parameters below:

Mandatory email and OpenAI settings

- EMAIL_SERVER_IMAP: (Mandatory) Support email IMAP server URL

- EMAIL_SERVER_SMTP: (Mandatory) Support email SMTP server URL

- SUPPORT_EMAIL_ADDRESS: (Mandatory) Support email address

- SUPPORT_EMAIL_PASSWORD: (Mandatory) Support email password

- OPENAI_API_KEY: (Mandatory) OpenAI API key

Optional conversational settings

- CONTEXT: (Optional, default: empty) Product context in short, precise terms, language-agnostic if possible, e.g. ‘Answer a question about TabShop app’

- MAX_PROMPT_TOKENS: (Optional, default: 200) Prompt token limit to keep cost under control.

- RESPONSE_TOKENS: (Optional, default: 200) Response token limit to keep cost under control.

- MAX_REMEMBERED_MESSAGES: (Optional, default: 5) The maximum number of conversation messages the support agent remembers and includes in the OpenAI prompt. Keep in mind that a longer history means much higher token usage and OpenAI cost.
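To make the parameter list above more concrete, here is a minimal Python sketch of how such settings could be read from environment variables. The variable names and defaults are taken from the list; the `Settings` class and the `load_settings` function are hypothetical names of my own and do not necessarily reflect how InqueryIQ reads its configuration internally.

```python
import os
from dataclasses import dataclass
from typing import Mapping

# Names of the mandatory environment variables, as listed above.
MANDATORY = (
    "EMAIL_SERVER_IMAP",
    "EMAIL_SERVER_SMTP",
    "SUPPORT_EMAIL_ADDRESS",
    "SUPPORT_EMAIL_PASSWORD",
    "OPENAI_API_KEY",
)


@dataclass(frozen=True)
class Settings:
    """Hypothetical container for the documented parameters."""
    email_server_imap: str
    email_server_smtp: str
    support_email_address: str
    support_email_password: str
    openai_api_key: str
    context: str = ""
    max_prompt_tokens: int = 200
    response_tokens: int = 200
    max_remembered_messages: int = 5


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Fail fast on missing mandatory values and apply the documented defaults."""
    missing = [name for name in MANDATORY if not env.get(name)]
    if missing:
        raise ValueError("Missing mandatory settings: " + ", ".join(missing))
    return Settings(
        email_server_imap=env["EMAIL_SERVER_IMAP"],
        email_server_smtp=env["EMAIL_SERVER_SMTP"],
        support_email_address=env["SUPPORT_EMAIL_ADDRESS"],
        support_email_password=env["SUPPORT_EMAIL_PASSWORD"],
        openai_api_key=env["OPENAI_API_KEY"],
        context=env.get("CONTEXT", ""),
        max_prompt_tokens=int(env.get("MAX_PROMPT_TOKENS", "200")),
        response_tokens=int(env.get("RESPONSE_TOKENS", "200")),
        max_remembered_messages=int(env.get("MAX_REMEMBERED_MESSAGES", "5")),
    )
```

Checking the mandatory values at startup means a misconfigured container stops immediately instead of failing later on the first incoming email.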

How does InqueryIQ work?

InqueryIQ operates by first accessing the credentials associated with your designated support email address and retrieving all the newly received emails.
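The retrieval step can be sketched with Python's standard `imaplib` and `email` modules. This is only an illustration under my own assumptions: that "newly received" maps to the IMAP `UNSEEN` flag, that the inbox folder is `INBOX`, and that the server accepts IMAP over SSL. The function names `fetch_unseen` and `extract_inquiry` are hypothetical.

```python
import imaplib
from email import message_from_bytes, policy
from email.utils import parseaddr


def fetch_unseen(host: str, address: str, password: str):
    """Yield the raw bytes of every unseen message in INBOX (assumed folder)."""
    with imaplib.IMAP4_SSL(host) as imap:
        imap.login(address, password)
        imap.select("INBOX")
        _, data = imap.search(None, "UNSEEN")
        for num in data[0].split():
            _, parts = imap.fetch(num, "(RFC822)")
            yield parts[0][1]


def extract_inquiry(raw: bytes) -> dict:
    """Pull the sender address, subject and plain-text body out of a raw email."""
    msg = message_from_bytes(raw, policy=policy.default)
    _, sender = parseaddr(str(msg.get("From", "")))
    body_part = msg.get_body(preferencelist=("plain",))
    body = body_part.get_content().strip() if body_part is not None else ""
    return {
        "sender": sender.lower(),
        "subject": str(msg.get("Subject", "")),
        "body": body,
    }
```

Normalizing the sender address to lowercase makes it usable as a stable key when looking up earlier messages from the same person.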

Subsequently, it combines the configured product context, previous inquiry messages, and the email’s title and body. This consolidated text is then forwarded to the OpenAI generative AI SaaS service.
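The consolidation step could look roughly like the following sketch. The ordering of the parts and the blank-line separator are my assumptions, and `build_prompt` is a hypothetical helper; the exact prompt format that InqueryIQ sends is not shown here. Enforcing MAX_PROMPT_TOKENS would require a tokenizer and is left out.

```python
from typing import Sequence


def build_prompt(
    context: str,
    history: Sequence[str],
    subject: str,
    body: str,
    max_remembered: int = 5,
) -> str:
    """Join product context, recent history and the new email into one text."""
    # Keep only the most recent messages, mirroring MAX_REMEMBERED_MESSAGES.
    remembered = list(history)[-max_remembered:] if max_remembered > 0 else []
    parts = []
    if context:
        parts.append(context)
    parts.extend(remembered)
    # The email's title and body form the final part of the prompt.
    parts.append(f"{subject}\n{body}".strip())
    return "\n\n".join(parts)
```

For example, `build_prompt("Answer a question about TabShop app", [], "Printing", "How do I print an invoice?")` yields the context, a blank line, and then the subject and body of the new inquiry.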

The OpenAI generative SaaS service processes the provided inquiry prompt, taking into consideration the historical conversation with the specific email sender. InqueryIQ then receives the generated response from OpenAI and sends it back as an answer to the user’s email inquiry.
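Turning the generated text into a reply email can be sketched with the standard `email.message.EmailMessage` class. The helper name `build_reply` and the choice to prefix the subject with "Re:" and set the threading headers are my assumptions, not a description of InqueryIQ's actual behavior.

```python
from email.message import EmailMessage
from typing import Optional


def build_reply(
    support_address: str,
    recipient: str,
    subject: str,
    answer: str,
    message_id: Optional[str] = None,
) -> EmailMessage:
    """Wrap a generated answer in a reply addressed to the original sender."""
    reply = EmailMessage()
    reply["From"] = support_address
    reply["To"] = recipient
    # Avoid stacking prefixes when the inquiry is already a reply.
    reply["Subject"] = subject if subject.lower().startswith("re:") else f"Re: {subject}"
    if message_id:
        # Threading headers let mail clients group the reply with the inquiry.
        reply["In-Reply-To"] = message_id
        reply["References"] = message_id
    reply.set_content(answer)
    return reply
```

The resulting message could then be handed to `smtplib.SMTP_SSL(...).send_message(reply)` after logging in with the support email credentials, assuming the SMTP server accepts SSL connections.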

It’s worth noting that InqueryIQ retains the previous messages per email sender and includes them in each prompt, which ensures that the agent remains contextually aware.

Keep in mind that restarting the Docker container will also clear the conversation history, starting anew with each container session.
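Because the history disappears on restart, it is presumably held in process memory. A minimal sketch of such a per-sender memory, assuming a simple bounded queue for each email address, could look like this; `ConversationMemory` is a hypothetical class name and not part of InqueryIQ's documented interface.

```python
from collections import defaultdict, deque
from typing import List


class ConversationMemory:
    """In-memory, per-sender message history that is lost when the process exits."""

    def __init__(self, max_remembered: int = 5) -> None:
        # Each sender gets a bounded deque; the oldest entries fall off automatically.
        self._by_sender = defaultdict(lambda: deque(maxlen=max_remembered))

    def remember(self, sender: str, message: str) -> None:
        self._by_sender[sender.lower()].append(message)

    def history(self, sender: str) -> List[str]:
        # .get() avoids creating an empty entry for unknown senders.
        return list(self._by_sender.get(sender.lower(), ()))
```

Bounding the queue with `maxlen` corresponds to the MAX_REMEMBERED_MESSAGES setting: the memory and the prompt size stay capped no matter how long a conversation runs.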

InqueryIQ in action

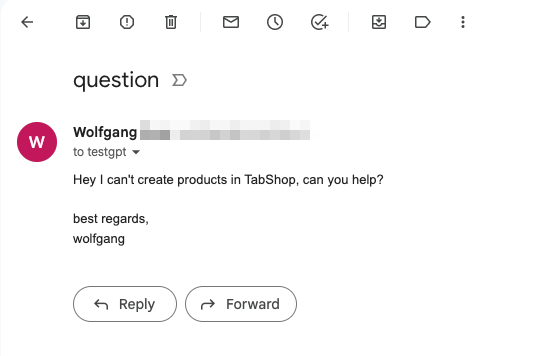

During testing, I established a distinct email address solely for communication with the InqueryIQ support agent, ensuring it doesn’t disrupt regular support email channels.

To maintain transparency, it’s highly advisable to create a dedicated email address for your AI support agent and inform users that they will be interacting with a machine, not a human support engineer.

With the email address in place and the product context set as “Answer a question about TabShop android app,” I successfully sent product inquiries to the InqueryIQ email address and received a remarkably satisfactory response, as demonstrated below:

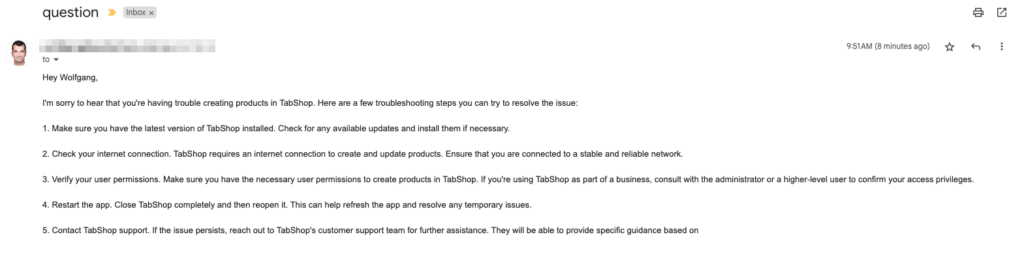

The InqueryIQ response email arrived within a minute and contained many useful hints for resolving the issue.

Summary

Leveraging OpenAI generative AI models to address support inquiries for your products and services presents a valuable opportunity, particularly for small and medium-sized businesses that may not have the resources to afford a human support agent. Not only does this approach enable business scalability, but it also provides customers with prompt and contextually relevant responses. Instead of waiting for days to receive assistance from an overwhelmed human support representative, customers can now receive immediate and helpful answers, enhancing their overall experience with your company.